Dev Diary - Building for free

One of the big challenges I've had with this project was building it for free. The game runs a lot of APIs and databases to support a massively multiplayer gameworld, and clouds aren't (completely) free. I have nightmares about waking up one morning to find a huge AWS bill waiting for me.

At my full-time job, I use AWS and don't really think about the cost. We spend thousands on AWS services, but a bill in the thousands is just the cost of doing business; optimising takes time, and time is money. So we create lambdas, EC2 machines and CloudWatch logs as far as the eye can see and just... don't worry... until the bill doubles from the previous month.

For this project, which is mostly for fun, I don't want a monthly invoice for a game that has zero income. So one of the first things I looked at was how far I could get with the AWS free tier. The answer: quite a long way, as long as you're prepared to compromise. You can use a lot of Lambda and DynamoDB every month without it costing anything, so I changed some of the game design to work within those constraints, particularly the constraints of DynamoDB.

Database

As an example, scouting for players requires finding suitable players in the database, but in DynamoDB that would likely mean scanning the whole table, or at least running very large queries. The player table would contain thousands of players, so hundreds of users making these requests could quickly run up a huge AWS bill, because AWS charges by how much you read from and write to the database. To get around that, I decided to queue up scouting requests and process them together. That lets us make a single database query to get the list of players, then use that one result to handle many scouting requests at once. This actually works well in the context of the game, as sending scouts off to search for players should take some time anyway.
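To make the batching idea concrete, here is a minimal Python sketch. It assumes queued requests are drained together and that `fetch_candidates` stands in for the one expensive database read; `ScoutRequest`, `Player`, `process_scouting_batch` and the matching rule (position plus a maximum age) are all hypothetical, not the game's actual code.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ScoutRequest:
    request_id: str
    position: str  # e.g. "GK", "DF"
    max_age: int


@dataclass
class Player:
    player_id: str
    position: str
    age: int


def process_scouting_batch(
    requests: List[ScoutRequest],
    fetch_candidates: Callable[[], List[Player]],
    max_results: int = 3,
) -> Dict[str, List[str]]:
    """Answer every queued scouting request from a single database read."""
    if not requests:
        return {}  # nothing queued, so skip the read entirely
    candidates = fetch_candidates()  # the one expensive query for the whole batch
    results: Dict[str, List[str]] = {}
    for req in requests:
        # Filtering happens in memory, so extra requests add no database reads.
        matches = [
            p.player_id
            for p in candidates
            if p.position == req.position and p.age <= req.max_age
        ]
        results[req.request_id] = matches[:max_results]
    return results
```

However many requests are in the batch, `fetch_candidates` is called once, so read cost scales with the number of batches rather than the number of scouts sent.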

Things quickly started to go off the rails, however. Dynamo was not the right database for the job, and it wasn't as free as it seemed. We store large volumes of data for each player and each fixture. DynamoDB meters reads in 4 KB units and writes in 1 KB units, so these large items pushed a single get or put from counting as one request to counting as several for billing purposes. We had already exceeded the free tier, and there wasn't a huge amount we could do about it. That, combined with the feeling that the game mechanics were being compromised to suit DynamoDB, led me to switch to PostgreSQL.
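To see why large items hurt, here is a rough Python sketch of the per-item arithmetic for a single GetItem or PutItem. It assumes DynamoDB's documented metering (reads rounded up to 4 KB, with eventually consistent reads costing half; writes rounded up to 1 KB) and ignores transactions and secondary indexes. The function names are illustrative.

```python
import math

READ_UNIT_BYTES = 4 * 1024   # reads are metered in 4 KB steps
WRITE_UNIT_BYTES = 1 * 1024  # writes are metered in 1 KB steps


def read_units(item_bytes: int, strongly_consistent: bool = True) -> float:
    """Read units consumed by one GetItem of an item of this size."""
    steps = max(1, math.ceil(item_bytes / READ_UNIT_BYTES))
    # Eventually consistent reads cost half as much.
    return float(steps) if strongly_consistent else steps / 2


def write_units(item_bytes: int) -> int:
    """Write units consumed by one PutItem of an item of this size."""
    return max(1, math.ceil(item_bytes / WRITE_UNIT_BYTES))
```

For a hypothetical 30 KB player item (an illustrative size, not a measurement from the game), `read_units(30 * 1024)` is 8.0 (4.0 if eventually consistent) and `write_units(30 * 1024)` is 30, so a single save costs as much as thirty small ones.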

PostgreSQL is a much better fit for a game of this type, where much of the data is relational by nature. A manager has a team. A team has players. Players have attributes. Teams take part in fixtures. Fixtures contribute to leagues. All of this was very inefficient to query in Dynamo. The trouble is, PostgreSQL is not in the free tier on AWS, so the next step was to self-host a database on my own VPS. This instantly made the logic simpler, but at the cost of performance, which tanked. Not because PostgreSQL itself is slow, but because I was self-hosting on a tiny instance with a microservice architecture: every time a lambda spins up to serve an API request, it has to open a new database connection before it can even run the query, and that was slow. With just one user in development this was acceptable, but as I wanted to move into public testing, it had to change.
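A common mitigation for per-invocation connection cost, sketched here in Python as an illustration rather than the approach the game uses, is to open the connection lazily and keep it at module scope, so only a cold start pays for it. `ConnectionCache` and `open_db_connection` are hypothetical names; a real version would call a driver such as psycopg and should also handle dropped connections.

```python
from typing import Any, Callable, Dict, Optional


class ConnectionCache:
    """Lazily opens one connection and reuses it for the life of the container."""

    def __init__(self, connect: Callable[[], Any]) -> None:
        self._connect = connect
        self._conn: Optional[Any] = None

    def get(self) -> Any:
        if self._conn is None:
            # Slow path: only the first invocation in a fresh (cold) container gets here.
            self._conn = self._connect()
        return self._conn


def open_db_connection() -> Any:
    # Hypothetical placeholder; a real version might return psycopg.connect(DSN).
    return object()


# Created at import time, so it lives outside the handler and survives warm invocations.
DB = ConnectionCache(open_db_connection)


def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    conn = DB.get()  # fast on warm invocations: the cached connection is reused
    # ... run the query with `conn` here ...
    return {"statusCode": 200}
```

This doesn't help cold starts, and each concurrent execution environment still holds its own connection, which is why a connection pooler in front of PostgreSQL (for example PgBouncer) is often used alongside it.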

Event Processing

I also started breaking the free tier limits on SQS. SQS is a message queue service that I use to process actions reliably, such as fixture processing. Twice a day, we process around 300 fixtures per gameworld. Each fixture is added to a queue; a lambda reads it from the queue and processes it, and if processing fails, the message is moved to a "dead letter queue" so it can be reprocessed or investigated. This stops us from being overwhelmed trying to process hundreds of fixtures at the same time and also adds some resilience: if processing fails, we capture the event that failed and can either fix the bug and replay it or discard it.

While SQS itself was fine in the free tier, for a lambda to read from a queue it needs a subscription (AWS calls it an event source mapping). This polls the queue every x seconds, and every poll counts as an SQS request; the free tier allows 1,000,000 of these a month. It's quite shocking how quickly you can burn through that with a few queues and poorly optimised intervals. Unlike the database, SQS actually is the right tool for the job: large quantities of data that need to be processed quickly, in parallel, with good error handling. So the best I could do here was increase the batch size and the poll interval so the lambdas are invoked less frequently. Some of the events are in no rush to be processed, so a long interval is perfectly acceptable.
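A back-of-the-envelope Python sketch of why idle polling adds up. The number of pollers per queue and the poll interval are assumptions passed in as parameters, not AWS's actual internal values, and a 30-day month is used. The second function shows the effect of batching, assuming each receive request returns up to `batch_size` messages (a single SQS receive call returns at most 10).

```python
import math

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds in a 30-day month
FREE_TIER_REQUESTS = 1_000_000         # monthly SQS request allowance


def idle_polls_per_month(queues: int, pollers_per_queue: int, poll_interval_s: float) -> int:
    """Requests made just by checking queues, even when they are empty."""
    return int(queues * pollers_per_queue * SECONDS_PER_MONTH / poll_interval_s)


def receives_for_messages(messages: int, batch_size: int) -> int:
    """Receive requests needed to drain `messages` when each returns up to `batch_size`."""
    return math.ceil(messages / batch_size)


# Illustrative numbers only: 5 queues, one poller each.
for interval in (10, 20, 60):
    polls = idle_polls_per_month(queues=5, pollers_per_queue=1, poll_interval_s=interval)
    print(f"every {interval}s: {polls:,} polls ({polls / FREE_TIER_REQUESTS:.0%} of free tier)")

# One gameworld's daily fixtures: around 300 matches, twice a day.
print(receives_for_messages(600, batch_size=1), receives_for_messages(600, batch_size=10))
```

With these illustrative numbers, polling every 10 seconds already comes to 1,296,000 requests a month, about 130% of the free tier, while polling every 60 seconds comes to 216,000; draining 600 fixtures takes 600 receives one at a time but 60 in batches of 10.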

CloudWatch

Another thing to balance is monitoring. It's really important that I know when our API starts producing errors, especially for events triggered on timed intervals, where no user would ever see an error in their app.

AWS CloudWatch offers a number of services that can help here, the most obvious being logging. AWS have a pretty generous free tier for logs, but it still needs to be used carefully. AWS charges for the volume of log data you ingest and for storing it. By default, my infrastructure was being deployed with logs kept "Forever". This is unnecessary and a rapid way to start running up a big AWS bill. In truth, I rarely care about a log more than a week after it's written, so a two-week retention period is plenty.
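If log groups have already been created without a retention policy, a one-off Python script along these lines can fix them. It's a sketch assuming boto3 and credentials with CloudWatch Logs permissions; `apply_retention` is a hypothetical helper, while `describe_log_groups` and `put_retention_policy` are real CloudWatch Logs operations. A log group with no `retentionInDays` field is one that never expires.

```python
from typing import Any, List

RETENTION_DAYS = 14  # CloudWatch only accepts specific values; 14 is one of them


def apply_retention(logs_client: Any, days: int = RETENTION_DAYS) -> List[str]:
    """Set a retention policy on every log group that currently never expires."""
    updated: List[str] = []
    paginator = logs_client.get_paginator("describe_log_groups")
    for page in paginator.paginate():
        for group in page.get("logGroups", []):
            if "retentionInDays" not in group:  # missing key means "never expire"
                logs_client.put_retention_policy(
                    logGroupName=group["logGroupName"],
                    retentionInDays=days,
                )
                updated.append(group["logGroupName"])
    return updated
```

Run it with `apply_retention(boto3.client("logs"))`. Setting retention in the deployment templates is the better long-term fix, since the script only touches log groups that exist when it runs.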

I still managed to blow through the free tier on logging, however. My match engine, which computes everything that happens in a match between two teams, had a large amount of debug logging in it. Given that it runs twice a day for about 300 matches each time, it wasn't long before this got out of hand.
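One way to keep verbose match-engine logging without paying to ingest it on every run is to gate it behind a log level. Below is a minimal Python sketch using the standard `logging` module; the `LOG_LEVEL` environment variable, `make_logger` and `log_match_event` are hypothetical names, not the game's actual configuration.

```python
import logging
import os


def make_logger(name: str, default_level: str = "INFO") -> logging.Logger:
    """Create a logger whose level comes from the (hypothetical) LOG_LEVEL env var."""
    logger = logging.getLogger(name)
    # setLevel accepts level names such as "INFO" or "DEBUG".
    logger.setLevel(os.environ.get("LOG_LEVEL", default_level).upper())
    return logger


logger = make_logger("match_engine")


def log_match_event(minute: int, event_type: str, team: str) -> None:
    # %-style arguments are only formatted if DEBUG is enabled for this logger.
    logger.debug("minute=%d event=%s team=%s", minute, event_type, team)
```

Debug lines are dropped before they are formatted or written, so they never reach CloudWatch; setting `LOG_LEVEL=DEBUG` on the function temporarily turns them back on while investigating a match.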

Once the logs are in place, I need to make sure I get alerted about errors. Here I used CloudWatch alarms hooked up to SNS topics that email me whenever one of my dead letter queues receives a failed event, or whenever the word "ERROR" appears in one of my lambda logs. AWS gives you 10 free alarms every month, and I have around 100 lambdas producing their own logs, so clearly I can't cover everything that way.
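The log-based half of that setup can be scripted. Below is a Python sketch, assuming boto3 clients are passed in and an SNS topic with an email subscription already exists; `create_error_alarm` and the `GameErrors` namespace are hypothetical, while `put_metric_filter` and `put_metric_alarm` are real CloudWatch Logs and CloudWatch operations. It also assumes the default `/aws/lambda/<function-name>` log group name.

```python
from typing import Any


def create_error_alarm(logs_client: Any, cloudwatch_client: Any,
                       function_name: str, sns_topic_arn: str,
                       namespace: str = "GameErrors") -> None:
    log_group = f"/aws/lambda/{function_name}"
    metric_name = f"{function_name}-errors"
    # 1. Turn every log line containing the term ERROR into a metric datapoint of 1.
    logs_client.put_metric_filter(
        logGroupName=log_group,
        filterName=f"{function_name}-error-filter",
        filterPattern="ERROR",
        metricTransformations=[{
            "metricName": metric_name,
            "metricNamespace": namespace,
            "metricValue": "1",
        }],
    )
    # 2. Alarm, and notify the SNS topic, if any ERROR line appears in a 5-minute window.
    cloudwatch_client.put_metric_alarm(
        AlarmName=f"{function_name}-errors",
        Namespace=namespace,
        MetricName=metric_name,
        Statistic="Sum",
        Period=300,
        EvaluationPeriods=1,
        Threshold=1.0,
        ComparisonOperator="GreaterThanOrEqualToThreshold",
        TreatMissingData="notBreaching",  # no log lines means no errors, not "unknown"
        AlarmActions=[sns_topic_arn],
    )
```

Each call creates one alarm, so with 10 free alarms this only scales to a handful of functions, which is why it can't cover all ~100 lambdas.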

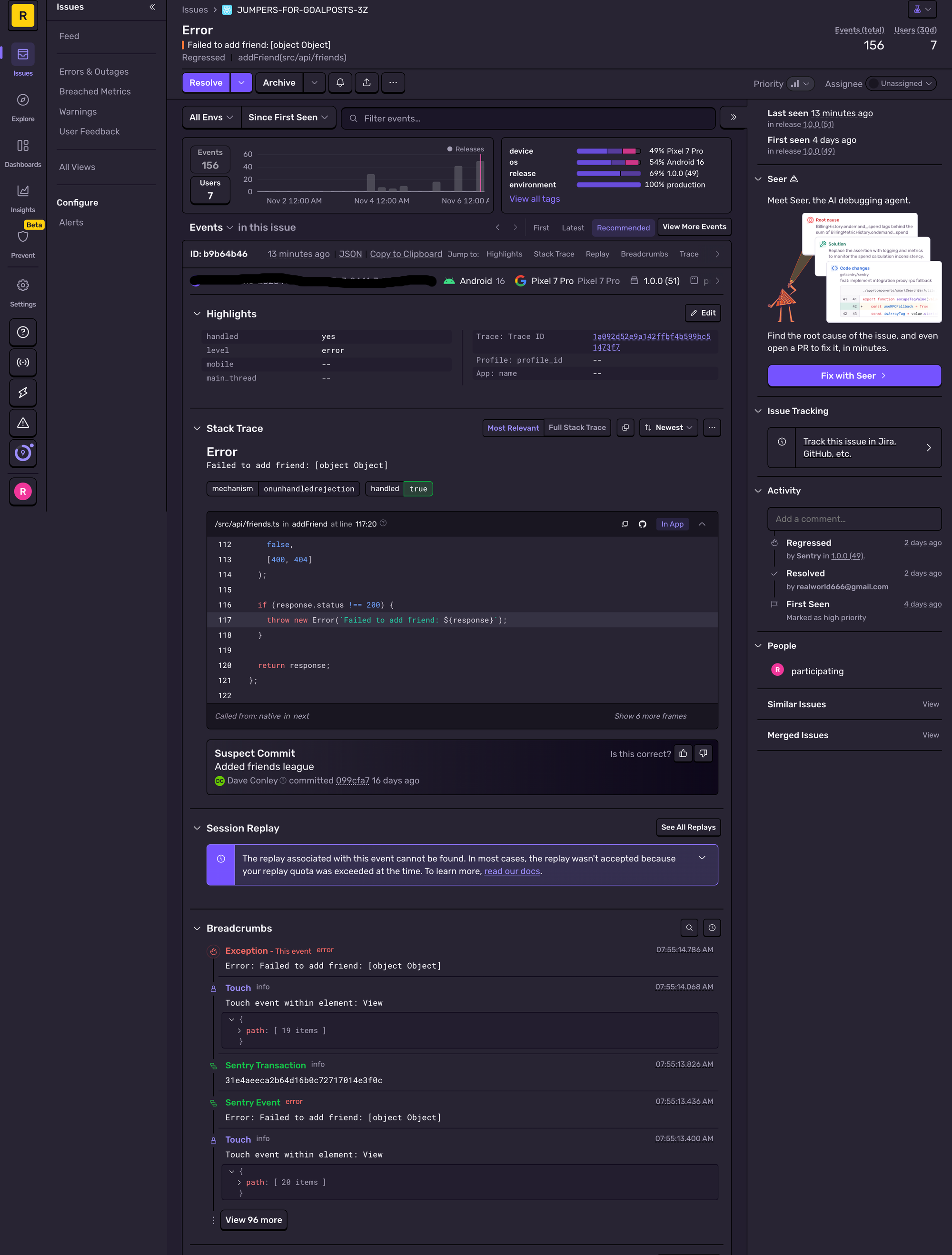

I restricted my alarms to just the lambdas that run from triggers, like the lambda that runs the match logic twice a day. For the API endpoints, I used Sentry, a third-party specialist monitoring service with its own generous free tier. I've added it to both the mobile app and the backend to catch errors; it provides really in-depth details of each error and emails me whenever there is a new issue.

Another helpful feature in CloudWatch is Lambda Insights. It provides dozens of data points about the performance of your lambdas, including which ones are hitting their maximum memory or running up against their maximum execution time. It's a good way to quickly see whether anything is under- or over-provisioned, and over the space of a couple of days it helped me identify some genuinely silent issues in the performance of my system. Unfortunately, it is very much not free. It publishes a set of metrics for every lambda, each with a small cost, so across a hundred lambdas it got expensive quickly and went in the bin.

Conclusion

So, is it possible to build a game on AWS for free? Well, no. Not this one, at least, but it can be done very cheaply. My AWS bill is currently $15 a month, and it will inevitably go up if the game gets a lot of users, but hopefully, if that happens, the income from ads and in-app purchases will go up to match. We'll see. It still isn't costing me anything (yet), thanks to the AWS Activate program. If you're starting out on AWS, it's well worth looking at, as it provides $1,000 in AWS credits for free. That, along with the free tier, should be enough to get a project to release and run it for a few months to see if it's viable. It also provides a buffer in case you make a mistake on costs, without hurting your own pocket. The program also gives access to other benefits, such as $300 of free Supabase credit, which is providing me with free database hosting for 12 months.